December 13, 2025

The potential reach of artificial intelligence is deep, and it can even extend into the seemingly benign process of screening and hiring job applicants, a process that in many ways screams for automation, especially in large organizations.

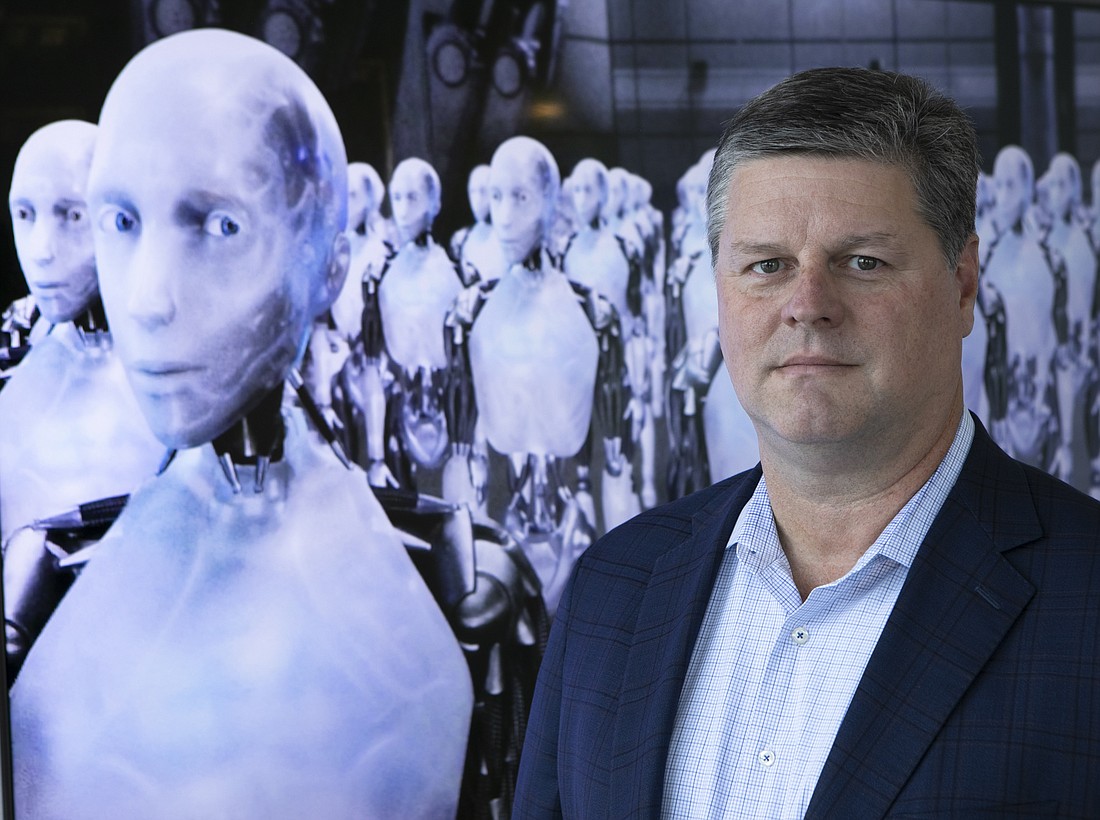

But Tampa attorney Kevin Johnson, an expert in employment law, says employers that use advanced AI-based tools to efficiently filter candidates for open positions should be aware of the legal risks. And those risks are plentiful.

“A lot of employers are starting to wake up to the potential of AI and trying to evaluate whether they should be using it in their workforce,” says Johnson, a partner at Johnson Jackson PLLC in Tampa. “There are already companies out there that are producing hiring applications that incorporate AI. But the question becomes, ‘If I’m using that process, as an employer, is there discrimination that can creep into it anywhere?’”